Image source: lightcommands.com

Voice assistant devices like Google Home and Amazon Alexa have become very popular and are viewed as the next big thing in home automation. The open nature of these platforms and the low cost of the devices have led a huge number of companies to release home automation products compatible with one or both of them. In fact, it is hard to find an electrical appliance company that has not entered this market with its latest offerings.

A team of researchers at the University of Michigan and the University of Electro-Communications, Tokyo, has found a surprising way to 'hijack' voice assistants with light. This is made possible by a physical phenomenon that allows light to create acoustic pressure waves. The field of research that studies this phenomenon is called photoacoustics, and the first work in this field was done by none other than Alexander Graham Bell in 1880.

So, to summarize, microphones can be made to react to laser light directed at them in the same way as they react to sound. Since voice assistants take their commands as sound, this phenomenon means they can be commanded by light as well. The scariest part is that light is silent, so people nearby will not hear that a command is being sent to their device.
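To make this more concrete, here is a minimal sketch of how a spoken command might be turned into a brightness pattern for a laser. It assumes the command is encoded as variations in beam intensity (amplitude modulation), with each audio sample nudging the laser's drive current up or down around a fixed level. The function names and the current values (`bias_ma`, `swing_ma`) are hypothetical and chosen only for illustration; they are not taken from the researchers' setup.

```python
import math


def tone(freq_hz, duration_s, sample_rate=16000):
    """Generate a sine tone as a stand-in for recorded speech (samples in [-1, 1])."""
    n = round(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def modulate_laser_current(audio, bias_ma=200.0, swing_ma=150.0):
    """Map audio samples in [-1, 1] to a laser drive current in milliamps.

    bias_ma and swing_ma are hypothetical illustration values: the current
    sits at bias_ma for silence and moves by at most swing_ma / 2 either way.
    """
    currents = []
    for sample in audio:
        clipped = max(-1.0, min(1.0, sample))  # guard against out-of-range audio
        currents.append(bias_ma + 0.5 * swing_ma * clipped)
    return currents


audio = tone(440.0, 0.01)          # 10 ms test tone instead of a real command
current = modulate_laser_current(audio)
print(min(current), max(current))  # stays within bias +/- swing / 2
```

In this picture the beam is never switched off; it only gets slightly brighter and dimmer in step with the audio, and the microphone responds to those changes as if they were sound.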

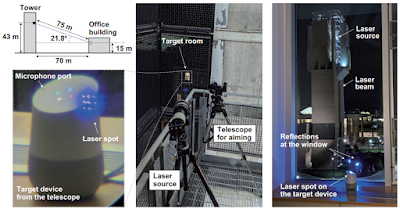

At the start of this article you can see a diagram illustrating how a laser directed from 75 meters away at a downward angle of 21 degrees and through a glass window was able to make a Google Home open the garage door. Below is a video of the same demonstration:

Now, many of you might be thinking that these devices have voice recognition and that this should be enough to stop them from following commands that are not your own. However, it seems that this feature is enabled by default only on smartphones and tablets, not on smart speakers. In addition, only the wake-up commands like "OK Google" and "Alexa" are verified by the devices, not the rest of the command. To compound the problem, the verification has been found to be quite weak: it can be fooled by online text-to-speech synthesis tools that imitate a person's voice. We have also seen demonstrations of machine learning technologies that imitate people's voices with a high degree of accuracy.

The researchers were also able to send commands to Siri on devices like the iPhone XR and iPad 6th Gen, so Apple devices are vulnerable to these hijackings too.

There are two drawbacks for hijackers, which are advantages for us. First, this technique only works with a clear line of sight to the device. Second, the hijacker has no way to get feedback on whether a command executed successfully unless they are within hearing range of the device's speaker. Nevertheless, until companies come up with really secure countermeasures, be wary of connecting devices that could compromise your security if hijacked.
